Evaluating artificial intelligence’s role in lung nodule diagnostics: A survey of radiologists in two pilot tertiary hospitals in China

*Corresponding author: You Wu, Institute for Hospital Management, School of Medicine, Tsinghua University, Beijing, China. youwu@tsinghua.edu.cn

How to cite this article: Liu W, Wu Y, Zheng Z, Yu W, Bittle MJ, Kharrazi H. Evaluating artificial intelligence’s role in lung nodule diagnostics: A survey of radiologists in two pilot tertiary hospitals in China. J Clin Imaging Sci. 2024;14:31. doi: 10.25259/JCIS_72_2024

Abstract

Objectives:

This study assesses the perceptions and attitudes of Chinese radiologists concerning the application of artificial intelligence (AI) in the diagnosis of lung nodules.

Material and Methods:

An anonymous questionnaire, consisting of 26 questions addressing the usability of AI systems and comprehensive evaluation of AI technology, was distributed to all radiologists affiliated with Beijing Anzhen Hospital and Beijing Tsinghua Changgung Hospital. Data were collected between July 19 and 21, 2023.

Results:

Of the 90 respondents, the majority favored the AI system’s convenience and usability, reflected in “good” system usability scale (SUS) scores (Mean ± standard deviation [SD]: 74.3 ± 11.9). General usability was similarly well-received (Mean ± SD: 76.0 ± 11.5), while learnability was rated as “acceptable” (Mean ± SD: 67.5 ± 26.4). Most radiologists noted increased work efficiency (Mean Likert scale score: 4.6 ± 0.6) and diagnostic accuracy (Mean Likert scale score: 4.2 ± 0.8) with the AI system. Views on AI’s future impact on radiology careers varied (Mean ± SD: 3.2 ± 1.4), with a consensus that AI is unlikely to replace radiologists entirely in the foreseeable future (Mean ± SD: 2.5 ± 1.1).

Conclusion:

Radiologists at two leading Beijing hospitals generally perceive the AI-assisted lung nodule diagnostic system positively, citing its user-friendliness and effectiveness. However, the system’s learnability requires enhancement. While AI is seen as beneficial for work efficiency and diagnostic accuracy, its long-term career implications remain a topic of debate.

Keywords

Artificial intelligence

Radiology

Radiologist

Lung nodule

Survey

System usability scale

INTRODUCTION

Artificial intelligence (AI) technology has become increasingly ubiquitous in the health-care sector, finding applications in various domains such as medical record management, screening, and diagnosis.[1] AI-aided diagnostic systems employ deep learning, image segmentation, and data mining technologies to accurately identify and quantify lesions in medical image data from X-rays, computed tomography (CT) scans, ultrasounds, and magnetic resonance imaging (MRI). This functionality serves as a valuable diagnostic tool for physicians, providing them with a robust foundation for their diagnostic assessments.[2,3] These systems are widely applied in various contexts, including but not limited to the examination of lung, breast, and cardiovascular systems.[4-6]

The perceptions of radiologists hold considerable sway over the integration of AI into clinical practice. Globally, the existing literature reveals a spectrum of viewpoints among radiologists regarding AI products. Some radiologists exhibit a favorable outlook toward technological advancements in AI, contending that AI systems can significantly reduce the incidence of misdiagnoses and omissions.[7] In addition, they assert that AI can enhance the overall efficiency and quality of their services, fostering an environment conducive to the acquisition of new skills and the implementation of transformative changes in their professional practices.[7-9] Conversely, other radiologists encounter challenges in placing trust in AI systems and integrating them into their routine diagnostic processes.[9] Furthermore, some radiologists harbor apprehensions regarding potential job insecurity stemming from the advent of AI and may be inclined to resist the widespread adoption of AI technologies.[10]

Despite the inherent diversity in functionalities and attributes of distinct AI systems, little research has focused explicitly on the user experience of specific AI systems. A study conducted in the United States observed that the detection rate of lung nodules has increased consistently year on year.[11] This underscores the potential of early lung nodule screening to afford opportunities for the timely diagnosis and subsequent treatment of lung cancer.[11] AI systems for the detection of lung nodules have been widely adopted in China.[12] Their functionality encompasses the detection and precise identification of lung nodules, mitigating the possibility of missed diagnoses and facilitating their classification as either benign or malignant.[13] Nevertheless, radiologists may hold varying perspectives on their experiences with this technology.

This study was undertaken to assess the usability scores of the AI-aided diagnostic system for lung nodules among Chinese radiologists. In addition, we sought to examine their attitudes and perceptions toward AI technology in tertiary hospital settings.

MATERIAL AND METHODS

Survey development

The radiology departments at Beijing Anzhen Hospital (Anzhen) and Beijing Tsinghua Changgung Hospital (Changgung) jointly administered an online survey titled “Survey on Radiologists’ Experience with AI-Assisted Lung Nodule Diagnosis Systems” [Supplementary Figure 1]. Participants comprised all radiologists affiliated with the two hospitals, at all levels of seniority from residents to professors. The research sample is the most comprehensive and complete representation of radiologists in tertiary hospitals located in developed cities in China. The questionnaire consisted of 26 questions, and respondents were estimated to require approximately 5 min to complete it. Institutional Review Board approval was not deemed necessary for this study. The study was conducted on an anonymous basis, and all participants provided signed informed consent forms.

The survey was structured into three distinct subparts. The initial segment comprised eight questions focused on respondents’ demographic information, including age, gender, affiliated hospital, years of professional experience, professional title, years of experience with AI, AI system-assisted subspecialty diagnosis, and AI-practiced techniques [Table 1]. Notably, no personal identifying data were collected in this section. The second part of the survey utilized a system usability scale (SUS) questionnaire consisting of ten questions designed to assess the usability of the AI system.[14] The SUS is a widely recognized instrument employed in studies evaluating system usability. It features a combination of positively framed statements for odd-numbered items and negatively framed statements for even-numbered items. The third part consisted of eight questions probing participants’ sentiments and predictions regarding the integration of AI applications into radiological practice, both at present and in the forthcoming 5–10 years. The questionnaire, encompassing the second and third sections, comprised a total of 18 questions, employing a five-point Likert scale ranging from “completely disagree” (score: 1) to “completely agree” (score: 5).

| Question number | Topic | Maximum number of answers | Answer list |
|---|---|---|---|
| I | Age range | 1 | 18–29 years, 30–39 years, 40–49 years, 50–59 years, 60–69 years, ≥70 years |
| II | Gender | 1 | Male, Female |
| III | Affiliated hospital | 1 | Beijing Anzhen Hospital, Beijing Tsinghua Changgung Hospital |
| IV | Working years | 1 | 0–3 years, 3–5 years, 5–10 years, 10–15 years, 15–20 years, ≥20 years |
| V | Professional title | 1 | Professor, Associate Professor, Attending Physician, Resident Physician, Intern |
| VI | AI experience years | 1 | 0–1 years, 1–2 years, 2–3 years, 3–4 years, 4–5 years, ≥5 years |
| VII | Subspecialty diagnosis | 12 | Lung, Breast, Cardiovascular, Cerebrovascular, Orthopedics, Abdominal, Ophthalmology, Radiotherapy, Interventional Radiology, Pediatrics, Pediatric, Urogenital |
| VIII | AI-practiced techniques | 7 | X-ray, CT, MRI, PET, Ultrasound, DSA, Optical Imaging |

AI: Artificial intelligence, CT: Computed tomography, MRI: Magnetic resonance imaging, PET: Positron emission tomography, DSA: Digital subtraction angiography

AI-aided diagnostic system

At Beijing Anzhen Hospital, the Care.ai system,[15] developed by Yitu Technology, was implemented in January 2019. This system possesses the capacity to autonomously detect and recognize lung nodules, providing detailed annotations encompassing size, location, density, and imaging characteristics of identified nodules, ultimately generating comprehensive diagnostic reports.

Meanwhile, Beijing Tsinghua Changgung Hospital adopted the Dr. Wise system in June 2021.[16] Developed by Deep-wise Inc., the Dr. Wise system operates as a computer-aided detection and diagnosis system. It is engineered to autonomously and precisely detect and segment both solid and ground-glass lung nodules, and then automatically calculates parameters such as nodule diameter, density, volume, mass, volume doubling time, and mass doubling time.[16]

Statistical analysis

Quantitative variables were expressed as means with standard deviations (SDs) and ranges. Categorical variables were expressed as counts and percentages of the total number of responses. Selected variables including gender, age range, geographical location, years of professional experience, professional title, and years of experience with AI were predetermined and included in subgroup analyses. Questions related to “AI practiced techniques besides lung nodule” and “AI system-assisted subspecialty diagnosis besides lung nodule” were designed as multiple-choice questions and analyzed through ranking methods. The cumulative SUS score obtained from the second part of the questionnaire was scored and converted to a scale of 0–100.[17] Statistical analyses were carried out using RStudio (Version R 4.1.2), and graphical representations were generated using Excel 2019 software. P < 0.05 was considered statistically significant.
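The conversion of the ten SUS item responses to a 0–100 score can be sketched as follows. This is a minimal Python illustration of the standard SUS scoring rule (each odd-numbered item contributes its response minus 1, each even-numbered item contributes 5 minus its response, and the sum is multiplied by 2.5). The function name `sus_score` and the example responses are hypothetical; the authors' analysis was carried out in R, and this is not their script.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one respondent.

    `responses` holds the ten item answers in questionnaire order,
    each on the 1-5 Likert scale (1 = completely disagree, 5 = completely agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered items are positively framed: higher agreement is better.
            total += response - 1
        else:
            # Even-numbered items are negatively framed: lower agreement is better.
            total += 5 - response
    # The raw sum ranges from 0 to 40; 2.5 rescales it to 0-100.
    return total * 2.5


# Hypothetical respondent who agrees with every positive item (4)
# and disagrees with every negative item (2).
example = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(example))
```

Because the even-numbered items are reverse-scored, a respondent who answers 3 to every item lands exactly at the midpoint of the scale.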

RESULTS

Participant demographics

The demographic characteristics of the participants are presented in Table 2. A total of 90 valid responses were collected, comprising 57.78% female and 42.22% male participants. The largest cohort (37.78%) fell within the 30–39 age bracket, followed by the 18–29 age group (28.89%) and the 40–49 age group (21.11%). Regarding the workplace, 26 participants (28.89%) were affiliated with Beijing Tsinghua Changgung Hospital, while 64 participants (71.11%) were associated with Beijing Anzhen Hospital. Nearly half of the respondents (41, 45.56%) reported 10 or more working years. The most common professional title was “resident physician” (28, 31.11%).

| Variable | Radiologists (n=90): n | Radiologists (n=90): % |
|---|---|---|
| Sex | | |
| Male | 38 | 42.22 |
| Female | 52 | 57.78 |
| Age (year) | | |
| 18–29 | 26 | 28.89 |
| 30–39 | 34 | 37.78 |
| 40–49 | 19 | 21.11 |
| 50–59 | 10 | 11.11 |
| 60–69 | 1 | 1.11 |
| Working Hospital | | |
| Tsinghua Changgung | 26 | 28.89 |
| Anzhen | 64 | 71.11 |
| Working Years | | |
| 0–3 | 26 | 28.89 |
| 3–5 | 7 | 7.78 |
| 5–10 | 16 | 17.78 |
| 10–15 | 16 | 17.78 |
| 15–20 | 3 | 3.33 |
| ≥20 | 22 | 24.44 |
| Professional title | | |
| Professor | 13 | 14.44 |
| Associate professor | 18 | 20.00 |
| Attending physician | 23 | 25.56 |
| Resident physician | 28 | 31.11 |
| Intern | 8 | 8.89 |

Table 3 displays the results from utilizing the AI-assisted diagnostic system. As for experience utilizing AI systems, there was notable diversity, with the highest percentage of participants (22.22%) falling within the 1–2 years category.

| Variable | Radiologists (n=90): n | Radiologists (n=90): % |
|---|---|---|
| AI experience years | | |
| 0–1 | 10 | 11.11 |
| 1–2 | 20 | 22.22 |
| 2–3 | 17 | 18.89 |
| 3–4 | 11 | 12.22 |
| 4–5 | 16 | 17.78 |
| >5 | 16 | 17.78 |
| AI practiced techniques | | |
| X-ray | 34 | 37.78 |
| CT | 79 | 87.78 |
| MRI | 13 | 14.44 |
| PET | 1 | 1.11 |
| DSA | 1 | 1.11 |
| Optical Imaging | 1 | 1.11 |
| Used AI system | | |
| Lung | 44 | 48.89 |
| Breast | 52 | 57.78 |
| Orthopedics | 28 | 31.11 |
| Cardiovascular | 69 | 76.67 |
| Abdominal | 9 | 10.00 |
| Cerebrovascular | 34 | 37.78 |
| Interventional radiology | 1 | 1.11 |
| Pediatrics | 1 | 1.11 |

AI: Artificial intelligence, CT: Computed tomography, MRI: Magnetic resonance imaging, PET: Positron emission tomography, DSA: Digital subtraction angiography

Alongside lung nodule screening, the most frequently utilized AI techniques among participants included CT (79, 87.78%), followed by X-ray (34, 37.78%), and MRI (13, 14.44%). Furthermore, the AI systems most commonly employed were those focused on cardiovascular applications (69, 76.67%), followed by breast-related AI systems (52, 57.78%), and lung-related AI systems (44, 48.89%).

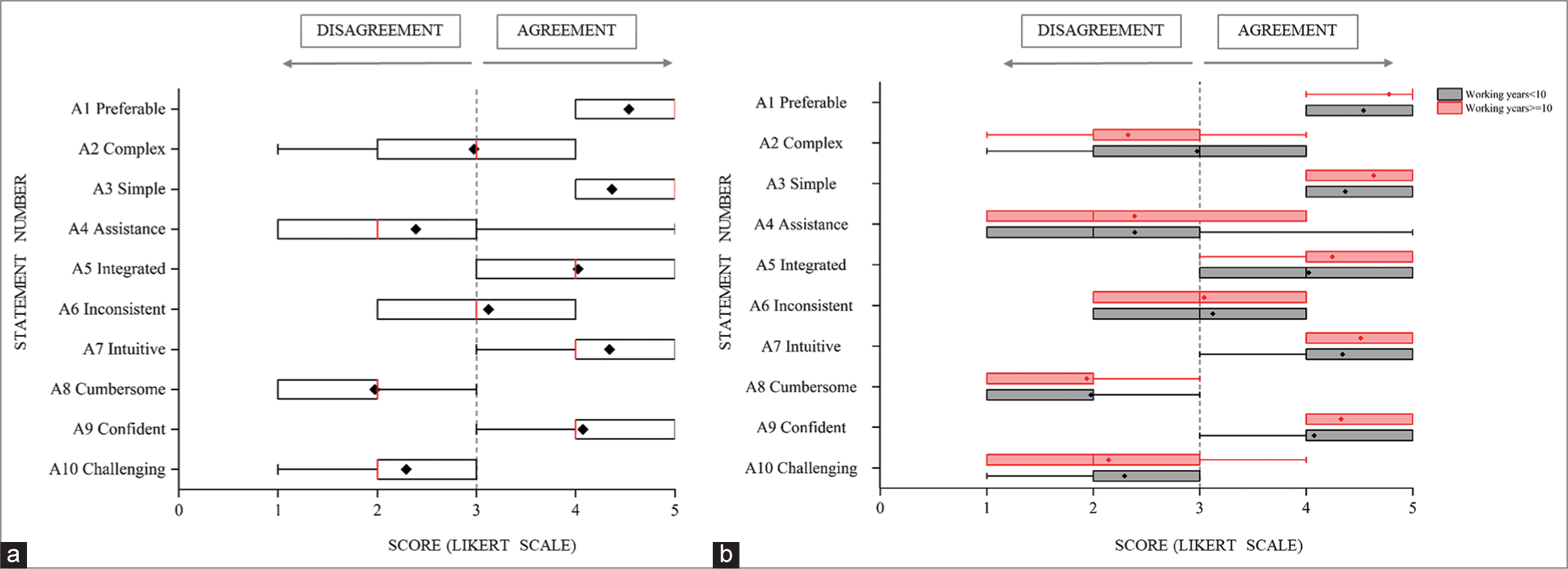

AI SUS

Figure 1 presents the outcomes of the AI SUS. In general, radiologists reported that the AI system was convenient and user-friendly. SUS scores spanned from 45.0 to 100.0, with an average score of 74.3 (SD 11.9), signifying a “good” level of usability.[18] Specifically, for the dimensions of usability (comprising 8 items) and learnability (comprising 2 items),[19] usability scores were “good” (76.0 ± 11.5 [SD]), and learnability scores were “OK” (67.5 ± 26.4 [SD]).
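The split into usability and learnability subscales can be sketched in the same way. The Python example below assumes the two-factor structure described in the cited factor-analysis reference,[19] in which items 4 and 10 form the learnability subscale and the remaining eight items form the usability subscale, with multipliers of 12.5 and 3.125 so that both subscales also range from 0 to 100. The function name `sus_subscales` and the example responses are hypothetical and are not taken from the authors' analysis.

```python
LEARNABILITY_ITEMS = (4, 10)  # assumed item assignment from the two-factor SUS model


def sus_subscales(responses):
    """Return overall, usability, and learnability scores (each 0-100)."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten item responses")

    # Per-item contribution on a 0-4 scale, reverse-scoring even-numbered items.
    contributions = {}
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            contributions[item_number] = response - 1
        else:
            contributions[item_number] = 5 - response

    learn_sum = sum(contributions[i] for i in LEARNABILITY_ITEMS)
    usable_sum = sum(v for i, v in contributions.items() if i not in LEARNABILITY_ITEMS)

    return {
        "overall": (usable_sum + learn_sum) * 2.5,  # 10 items, raw maximum 40
        "usability": usable_sum * 3.125,            # 8 items, raw maximum 32
        "learnability": learn_sum * 12.5,           # 2 items, raw maximum 8
    }


# Hypothetical respondent who is less sure about the two learnability items.
scores = sus_subscales([4, 2, 4, 3, 4, 2, 4, 2, 4, 3])
print(scores)

# With this scaling, overall = 0.8 * usability + 0.2 * learnability.
# The reported means satisfy the same identity.
reported_overall = 0.8 * 76.0 + 0.2 * 67.5
print(round(reported_overall, 1))
```

Under these assumptions the overall score is a weighted average of the two subscales, and the reported means are mutually consistent: 0.8 × 76.0 + 0.2 × 67.5 = 74.3.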

Figure 1: Artificial intelligence system usability scale. (a) System usability scale (SUS) scores in all radiologists. (b) SUS scores in radiologists with <10 working years and with ≥10 working years. Box plots and whisker plots show the distribution of responses using a Likert scale ranging from 1 to 5. The vertical bar represents the median value; the 10th and 90th percentiles are set as whisker limits and the 25th and 75th as box limits; the mean value is plotted as a “•.”

Furthermore, within the subgroup analysis, radiologists with fewer years of experience tended to yield higher SUS scores (working years < 10: 76.6 ± 12.0 [SD], working years ≥10: 71.5 ± 11.3 [SD]; P < 0.05) and higher usability scores (working years <10: 78.7 ± 10.9 [SD], working years ≥10: 72.7 ± 11.6 [SD]; P < 0.05). Notably, no significant differences were observed in learnability scores between these two subgroups (working years <10: 68.4 ± 28.2 [SD], working years ≥10: 66.5 ± 24.3 [SD]).
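The subgroup figures can be sanity-checked from the reported summary statistics alone. The source does not state which test produced the P values, so the Python sketch below assumes Welch's unequal-variance t-test purely for illustration. It also assumes subgroup sizes of 49 (<10 working years) and 41 (≥10 working years), obtained by summing the working-year categories in Table 2. The function name `welch_t` is hypothetical.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and approximate degrees of freedom from summary statistics."""
    v1 = sd1 ** 2 / n1  # squared standard error of group 1
    v2 = sd2 ** 2 / n2  # squared standard error of group 2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Assumed subgroup sizes, summed from the working-year rows of Table 2.
N_UNDER_10 = 26 + 7 + 16  # 0-3, 3-5, and 5-10 years
N_10_PLUS = 16 + 3 + 22   # 10-15, 15-20, and >=20 years

t_sus, df_sus = welch_t(76.6, 12.0, N_UNDER_10, 71.5, 11.3, N_10_PLUS)
t_learn, df_learn = welch_t(68.4, 28.2, N_UNDER_10, 66.5, 24.3, N_10_PLUS)

# The size-weighted mean of the subgroup SUS means should recover the overall mean.
weighted_mean = (N_UNDER_10 * 76.6 + N_10_PLUS * 71.5) / (N_UNDER_10 + N_10_PLUS)

print(f"SUS: t = {t_sus:.2f}, df = {df_sus:.1f}")
print(f"Learnability: t = {t_learn:.2f}, df = {df_learn:.1f}")
print(f"Weighted SUS mean = {weighted_mean:.1f}")
```

Under these assumptions, the SUS comparison gives a t statistic of about 2.07 on roughly 87 degrees of freedom, which exceeds the two-sided 5% critical value of about 1.99, while the learnability comparison gives a t statistic well below 1. Both are in line with the reported significance pattern, and the weighted mean reproduces the overall SUS score of 74.3.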

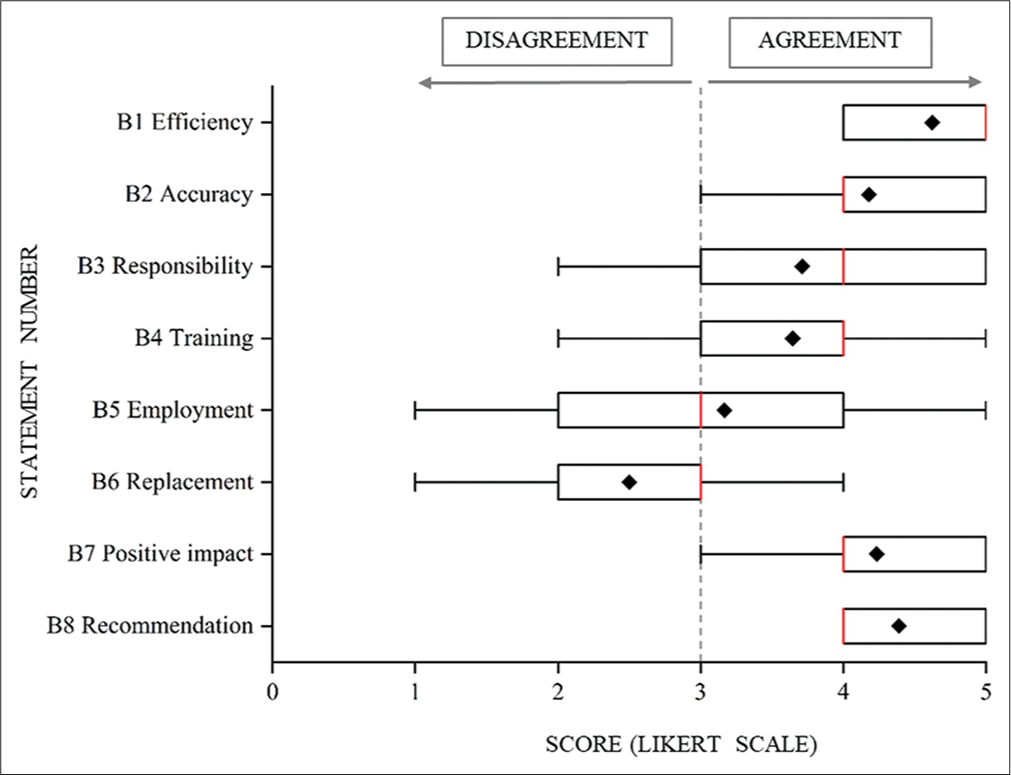

A comprehensive evaluation of AI technology

Figure 2 illustrates the outcomes of the comprehensive evaluation of AI technology. Radiologists reported that the AI system had a positive impact on their work, with mean Likert scale scores indicating an improvement in work efficiency (4.6 ± 0.6 [SD], range: 2–5) and enhanced diagnostic accuracy (4.2 ± 0.8 [SD], range: 2–5).

Figure 2: A comprehensive evaluation of artificial intelligence technology. Box plots and whisker plots show the distribution of responses using a Likert scale ranging from 1 to 5. The vertical bar represents the median value; the 10th and 90th percentiles are set as whisker limits and the 25th and 75th as box limits; the mean value is plotted as a “•.”

Radiologists expressed their preferences regarding the allocation of responsibility for diagnostic errors when AI systems are in use, with an average Likert scale score of 3.7 ± 1.4 (SD), indicating a preference that the responsibility should primarily rest with the radiologist (range: 1–5). In addition, they conveyed a perception that they lacked sufficient training in imaging AI, as evidenced by an average Likert scale score of 3.6 ± 1.1 (SD) (range: 1–5). Radiologists held varying opinions on the potential impact of AI systems on the employment opportunities of imaging physicians in the next 5–10 years, with a tendency toward a mild agreement (average Likert scale score: 3.2 ± 1.4 [SD], range: 1–5). Moreover, radiologists exhibited a tendency to mildly disagree with the notion that medical imaging AI technology would ultimately replace radiologists in the long run (average Likert scale score: 2.5 ± 1.1 [SD], range: 1–5).

Radiologists broadly acknowledged the potential positive impact of medical imaging AI technology on the imaging department over the next 5 years, with a mean Likert scale score of 4.2 ± 0.8 (SD) (range: 2–5). Furthermore, they demonstrated a strong inclination to recommend AI systems to their peers, as reflected by an average Likert scale score of 4.4 ± 0.7 (SD) (range: 2–5).

DISCUSSION

Acceptable SUS scores

Our study delved into the assessment of system usability scores among radiologists concerning the AI-aided diagnostic system designed for lung nodules. This system operates by processing post-CT examination images, recognizing segmented images, and detecting nodules. The radiologists from both hospitals displayed a positive attitude toward the AI system as a whole. The usability scores were consistently rated as “good,” reflecting radiologists’ confidence in the system’s potential for frequent application and its ease of use. However, the perspectives on system consistency exhibited a degree of divergence. Furthermore, our study underscores the paramount importance of evaluating critical data characteristics, such as data quality and consistency, when integrating AI-assisted tools into clinical practice. These considerations are accompanied by a myriad of legal, ethical, and clinical acceptability issues that merit meticulous examination in the deployment of such technologies.[20] The system-providing company is required to perform debugging and deployment tailored to the specific needs of each hospital, with continuous updates aimed at enhancing system consistency. Furthermore, particular emphasis should be placed on incorporating feedback from radiologists into the system’s design, ensuring that it aligns with their preferences and facilitates ease of use. Notably, our findings indicated that radiologists with fewer years of experience exhibited higher usability scores, highlighting their increased receptivity toward AI systems. This underscores the importance of considering the level of acceptability among radiologists when implementing AI technologies.[21]

The learnability scores were rated as “OK,” indicating that radiologists perceived the system as reasonably learnable. However, radiologists also commonly reported that they lacked sufficient training in the field of imaging AI. This underscores the importance of equipping radiologists with the requisite knowledge and awareness to facilitate their seamless adaptation to the system.[8,22] Waymel et al. revealed that radiologists lacked pre-existing knowledge and information pertaining to AI.[7] Advanced AI expertise can significantly increase the extent of AI’s clinical utilization, while a rudimentary understanding of AI can hinder radiologists’ effective application of the technology.[10] The absence of structured training frequently requires radiologists to engage in self-directed learning or to rely on commercial videos and product manuals provided by software vendors to acquire the necessary knowledge and usage skills. This approach could present challenges, as radiologists may not fully grasp the software’s functionality and applicability, which could lead to an overestimation of the product’s performance.

Necessary decision support

Although the sample size is limited, our study provides in-depth insights, particularly regarding the impact of AI technology on the professional attitudes of radiologists. This aspect is highly significant yet remains underexplored. The AI system has exerted a beneficial influence on imaging departments, underlining the importance of its widespread adoption. Radiologists believe that AI has played a pivotal role in enhancing their work efficiency and diagnostic accuracy. These favorable perspectives align closely with findings from prior research studies.[23,24] AI serves as a valuable aid to junior radiologists in the diagnostic process, and its role in enhancing healthcare, particularly in underdeveloped regions, is of particular importance.[25] It is conceivable that junior radiologists may more readily embrace and adeptly utilize AI software compared to their senior counterparts. Nevertheless, the advantages of AI for senior radiologists appear to be constrained. The task of labeling false-positive results may result in increased physician workload and additional stress for patients.[26]

The viewpoints of radiologists concerning the potential impact of AI on their future employment are complex. The evolution of AI confronts a multitude of challenges, including issues of uncertainty and non-interpretability, which preclude exclusive reliance on AI for medical diagnosis. Consequently, radiologists retain an indispensable role.[27] Ideally, the integration of AI should commence within the medical curriculum from the outset of medical students’ education. This early exposure allows students to enhance their learning, acclimatize to future work environments, and cultivate a lasting sense of confidence in this evolving technology.[28]

Limitations

Our study is subject to several limitations. First, the survey’s representativeness could be improved. We exclusively targeted radiologists from two tertiary hospitals, Beijing Anzhen Hospital and Beijing Tsinghua Changgung Hospital, potentially limiting the generalizability of our findings to other health-care institutions. It is noteworthy that although these two hospitals had merged into the same company at the time of the survey, the AI systems they employed were developed by distinct companies, which introduces a degree of heterogeneity. In future research, we plan to increase the sample size to validate and expand on the current findings, particularly concerning the impact of AI on the future profession of radiologists. Second, the distribution of the survey by the heads of the imaging department to all radiologists may have introduced social desirability bias, as radiologists might have been inclined to provide positive evaluations due to this hierarchical relationship. Third, our study primarily elucidates overarching trends within the surveyed population. The considerable variance observed in the responses to each question underscores the persisting diversity in opinions among individual radiologists regarding this subject. In light of this diversity, future research may benefit from supplementing our findings with in-depth interviews to obtain a more comprehensive understanding of the intricate perspectives held by radiologists on this topic.

CONCLUSION

Among radiologists working in two tertiary hospitals in Beijing, the AI-assisted diagnostic system dedicated to lung nodule diagnosis received a “good” rating on the SUS, indicating their favorable perception of its user-friendliness and suitability for frequent use. However, there is room for improvement in learnability scores, particularly in system design and integration areas. While the majority of radiologists recognize the positive impact of AI systems on their work efficiency and diagnostic accuracy, there is a diversity of opinions regarding the potential influence of AI on career prospects within the field of radiology.

Ethical approval

No Institutional Review Board (IRB) approval was deemed necessary for this study, as it entailed a voluntary survey conducted among radiology professionals, did not involve any health-related information, and ensured the complete anonymization of all collected data. Participants were explicitly informed that the data would be handled anonymously and might be utilized for scientific publication purposes.

Declaration of patient consent

Patient’s consent is not required as there are no patients in this study.

Conflicts of interest

There are no conflicts of interest.

Use of artificial intelligence (AI)-assisted technology for manuscript preparation

The authors confirm that there was no use of artificial intelligence (AI)-assisted technology for assisting in the writing or editing of the manuscript and no images were manipulated using AI.

Supplementary material available on:

Financial support and sponsorship

Nil.

References

- Health intelligence: How artificial intelligence transforms population and personalized health. NPJ Digit Med. 2018;1:53.

- Deep learning: A primer for radiologists. Radiographics. 2017;37:2113-31.

- A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60-88.

- Artificial intelligence and its future potential in lung cancer screening. EXCLI J. 2020;19:1552-62.

- Breast cancer detection using artificial intelligence techniques: A systematic literature review. Artif Intell Med. 2022;127:102276.

- Artificial intelligence in precision cardiovascular medicine. J Am Coll Cardiol. 2017;69:2657-64.

- Impact of the rise of artificial intelligence in radiology: What do radiologists think? Diagn Interv Imaging. 2019;100:327-36.

- Canadian association of radiologists white paper on artificial intelligence in radiology. Can Assoc Radiol J. 2018;69:120-35.

- Artificial intelligence: Radiologists' expectations and opinions gleaned from a nationwide online survey. Radiol Med. 2021;126:63-71.

- An international survey on AI in radiology in 1,041 radiologists and radiology residents part 1: Fear of replacement, knowledge, and attitude. Eur Radiol. 2021;31:7058-66.

- Recent trends in the identification of incidental pulmonary nodules. Am J Respir Crit Care Med. 2015;192:1208-14.

- Chinese Experts consensus on artificial intelligence assisted management for pulmonary nodule (2022 version). Zhongguo Fei Ai Za Zhi. 2022;25:219-25.

- Artificial intelligence: A critical review of applications for lung nodule and lung cancer. Diagn Interv Imaging. 2023;104:11-7.

- SUS: A quick and dirty usability scale. In: Usability evaluation in industry. USA: Taylor and Francis; 1996. p. 189-94.

- A novel deep learning-based quantification of serial chest computed tomography in coronavirus disease 2019 (COVID-19). Sci Rep. 2021;11:417.

- Long-term follow-up of persistent pulmonary pure ground-glass nodules with deep learning-assisted nodule segmentation. Eur Radiol. 2020;30:744-55.

- Determining what individual SUS scores mean: Adding an adjective rating scale. J Usability Stud. 2009;4:114-23.

- The factor structure of the system usability scale. Vol 5619. Berlin: Springer; 2009. p. 94-103.

- Machine learning in whole-body MRI: Experiences and challenges from an applied study using multicentre data. Clin Radiol. 2019;74:346-56.

- Technology acceptance theories and factors influencing artificial Intelligence-based intelligent products. Telemat Inform. 2020;47:101324.

- Attitudes toward artificial intelligence in radiology with learner needs assessment within radiology residency programmes: A national multi-programme survey. Singapore Med J. 2021;62:126-34.

- Association of artificial intelligence-aided chest radiograph interpretation with reader performance and efficiency. JAMA Netw Open. 2022;5:e2229289.

- Introduction of human-centric AI assistant to aid radiologists for multimodal breast image classification. Int J Hum Comput Stud. 2021;150:102607.

- The added value of an artificial intelligence system in assisting radiologists on indeterminate BI-RADS 0 mammograms. Eur Radiol. 2022;32:1528-37.

- Assessment of an artificial intelligence aid for the detection of appendicular skeletal fractures in children and young adults by senior and junior radiologists. Pediatr Radiol. 2022;52:2215-26.

- Causability and explainability of artificial intelligence in medicine. Wiley Interdiscip Rev Data Min Knowl Discov. 2019;9:e1312.

- Influence of artificial intelligence on Canadian medical students' preference for radiology specialty: A national survey study. Acad Radiol. 2019;26:566-77.